Paradoxical Insight: the AI ecosystem that’s perceived as less popular and less “frontier” (Google/Gemini) can end up capturing more economic value than the headline model leader (OpenAI/ChatGPT), because vertical integration turns rising compute demand into profits instead of costs.

A Tale of Two Complexes

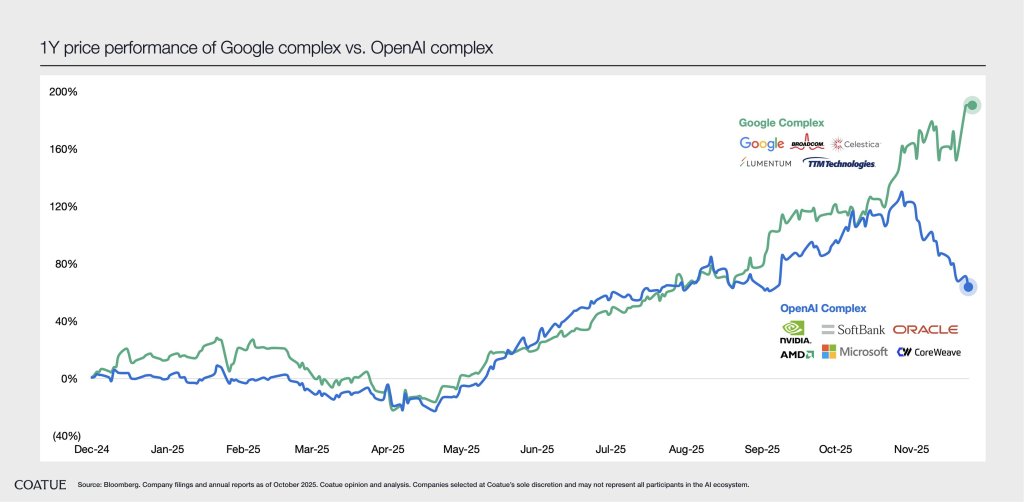

A recent X post by Philippe Laffont of Coatue highlighted how the price performance of the “Google Complex” of stocks has diverged significantly from that of the “OpenAI Complex” of stocks. Here are my initial thoughts on this divergence.

First, the two complexes refer to two distinct AI supply chains that are emerging.

Google Complex:

- Google $GOOGL – the vertically integrated AI platform.

- Broadcom $AVGO – TPU chip designer + custom silicon partner.

- Celestica $CLS – systems integrator for Google datacenters.

- Lumentum $LITE – optical components for Google datacenters.

- TTM Tech $TTMI – advanced PCBs and substrates for TPUs.

Google is finally showing the market: (1) real AI adoption inside Search, (2) a viable alternative to Nvidia dominance via TPUs, and (3) massive efficiency gains from owning its own chips, models, and distribution. The Google AI revenue play is more linear, more profitable, and less dependent on external GPU bottlenecks, and it is now being re-rated.

OpenAI Complex:

- OpenAI – frontier model developer + current AI demand engine.

- Nvidia $NVDA – primary GPU provider + proprietary AI ecosystem.

- AMD $AMD – secondary GPU provider + open-source ecosystem.

- Microsoft $MSFT – primary cloud + infra partner and distribution channel.

- Oracle $ORCL – AI data storage and primary enterprise AI provider.

- CoreWeave $CRWV – primary GPU cloud provider.

- SoftBank – large capital provider for AI ecosystem.

OpenAI gave birth to, and significantly benefited from, an AI ecosystem in which it was the model leader – driving AI demand across every layer. This ecosystem is tied to OpenAI’s frontier models (every LLM it releases sets the bar for AI compute requirements), the unprecedented AI capex cycle of hyperscalers like Microsoft, GPU supply/demand tightness, and speculative upside in agentic workloads.

Because OpenAI lives and dies by GPU availability, model-provider demand, compute pricing, and hyperscaler competitive dynamics, any signal that a competitor (Google) has developed a viable and more efficient hardware-to-software loop (TPUs) can lead to meaningful corrections in OpenAI’s private valuation and its public peers ($NVDA).

Investment Implications

Two paradigms are emerging: Google is becoming a distribution-first AI company, while OpenAI continues to be a model-first AI company. This late in the AI infra buildout, Google’s investment thesis becomes the more compelling one: higher visibility, better margins, lower volatility, and more defensible moats (search, ads, hardware stack) than, say, a trillion-parameter SOTA LLM that really has no defensible moat other than access to more relevant training data.

If OpenAI is able to ship the next “step-function model” (something on the level of DeepSeek-R1) or agentic usage explodes, GPU demand will spike again and further validate the OpenAI / Nvidia closed ecosystem. This test is not simply a matter of having the most AI talent – it’s about the quality of those engineers and their motivation and ability to come up with the next ingenious LLM architecture.

However – and I suspect this to be the more plausible scenario – if Google builds on its TPU-software ecosystem and the AI developer community starts adopting it, and if it is able to start moving its many services (Gmail, YouTube) onto agentic frameworks and become a leader in agentic AI, then the GPU trade can structurally underperform for years as hyperscalers flock to TPU/ASIC-based frameworks.

Base investment case:

- Overweight Google $GOOGL + Broadcom $AVGO (monetizable, less cyclical)

- Selected $NVDA / $AMD via debit call spreads (convexity, risk-controlled)

- Tactical $NBIS (better play than $CRWV) / $ORCL if GPU utilization tightens.
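
To make the “convexity, risk-controlled” point about call spreads concrete, here is a minimal sketch of the expiry payoff of a call spread (long a lower-strike call, short a higher-strike call). The function name, strikes, and net premium below are hypothetical illustration values, not market quotes or a recommendation.

```python
def call_spread_pnl(spot_at_expiry, long_strike, short_strike, net_debit):
    """Per-share P&L at expiry: long call at long_strike, short call at short_strike.

    net_debit is the premium paid up front (long premium minus short premium).
    All inputs are hypothetical illustration values.
    """
    if short_strike <= long_strike:
        raise ValueError("short_strike must be above long_strike")
    long_call_value = max(spot_at_expiry - long_strike, 0.0)
    short_call_value = max(spot_at_expiry - short_strike, 0.0)
    return long_call_value - short_call_value - net_debit


# Hypothetical example: buy the 100 call, sell the 120 call, pay 6.0 net.
LONG_STRIKE = 100.0
SHORT_STRIKE = 120.0
NET_DEBIT = 6.0

for spot in (80.0, 100.0, 106.0, 120.0, 150.0):
    pnl = call_spread_pnl(spot, LONG_STRIKE, SHORT_STRIKE, NET_DEBIT)
    print(f"spot={spot:6.1f}  pnl={pnl:+.1f}")
```

Under these assumed numbers the loss is capped at the 6.0 premium paid, the gain is capped at the strike width minus the premium (14.0), and breakeven sits at the long strike plus the premium (106.0). That bounded downside is what makes the structure risk-controlled.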

High-conviction bet:

- If you believe in the TPU thesis, go long and levered on the Google complex, and hedge with selective Nvidia $NVDA options.

- If you believe in perpetual GPU scarcity, lean into the OpenAI complex, but accept there may be volatility due to structural changes in AI supply chains.

Barring any monumental change in LLM architecture (including the launches of LWMs that may supersede LLM-only models), Broadcom $AVGO should stand to benefit. I believe hyperscalers will soon realize that LLM-based architecture performance is reaching a ceiling, and that if they want to monetize agentic workloads profitably, they need to scale their hardware more efficiently using ASICs.
